Testing AWS CDK Stacks on GitHub Actions with LocalStack

Learn how to create a GitHub Action workflow that deploys & tests your CDK Stack with LocalStack's cloud emulator, without creating any AWS resources!

AWS Cloud Development Kit (CDK) is an open-source framework that enables the creation of Infrastructure-as-Code configurations using programming languages like TypeScript, Python, and more. CDK comes with a handy command line interface (CLI) that facilitates direct interaction with the system, allowing users to execute various commands such as deploy, destroy, and synth.

Continuous Integration (CI) environments are commonly used for testing CDK stacks before deploying them on the actual AWS cloud. However, configuring AWS credentials and tearing down the CDK stack after testing require manual setup, which is often tedious. LocalStack streamlines integration testing by allowing CDK stack deployment and testing against a cloud emulator.

This blog will guide you in creating a GitHub Action to test CDK stack deployment within a CI workflow. Additionally, we will delve into implementing a basic integration test to verify the functional aspects of the infrastructure deployed on LocalStack.

How does LocalStack work with CDK?

LocalStack runs as a Docker container either on your local machine or in an automated setting. Once started, you can utilize LocalStack alongside tools like AWS CLI or Terraform to create local AWS resources. For local deployment and testing of CDK stacks, LocalStack provides a wrapper CLI called cdklocal for utilizing the CDK library with local APIs.

To set up cdklocal, install the npm packages and check the installed version:

    $ npm install -g aws-cdk-local aws-cdk
    ...
    $ cdklocal --version
    2.121.1

Internally, CDK integrates with AWS CloudFormation for infrastructure deployment and provisioning. When using cdklocal, you leverage a LocalStack-native CloudFormation engine that allows you to create the local resources, hence removing the need to deploy and test on the real cloud.

Prerequisites

GitHub account & gh CLI (optional)

Inventory Management System with SQS, Lambda, S3 and DynamoDB

This demo uses a public AWS example to showcase an event-driven inventory management system. The system deploys SQS, DynamoDB, Lambda, and S3, functioning as follows:

CSV files are uploaded to an S3 bucket to centralize and secure the inventory data.

A Lambda function reads and parses the CSV file, extracting inventory update records.

Each record is converted into a message and sent to an SQS queue. Another Lambda function continuously checks the SQS queue for new messages.

Upon receiving the message, it retrieves the inventory update details and updates the inventory levels in DynamoDB.
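The conversion of CSV rows into queue messages can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the sample repository's actual Lambda code: the function name `csv_to_messages` and the column names `item_id` and `quantity` are hypothetical.

```python
import csv
import io
import json


def csv_to_messages(csv_text):
    """Turn each CSV row into a JSON string usable as a message body.

    The keys come from the CSV header row; nothing here reflects the
    sample application's real schema.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [json.dumps(row, sort_keys=True) for row in reader]


# Hypothetical inventory file with two update records.
sample = "item_id,quantity\nA-100,5\nB-200,12\n"
messages = csv_to_messages(sample)
print(len(messages))
```

In a deployed function, each string would typically be passed as the `MessageBody` of a boto3 SQS `send_message` call, and the consuming function would parse it back with `json.loads` before writing to DynamoDB.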

Create the GitHub Action workflow

GitHub Actions is a tool that automates workflows. It lets you make custom workflows that automatically build, test, and deploy your code when you make changes to your repository.

For this demo, you will implement a workflow that does the following:

Check out the repository from GitHub.

Install the dependencies.

Bootstrap and deploy the CDK stack on the GitHub Action Runner.

Run a basic integration test to verify the functionality.

To start, fork the AWS sample on GitHub. If you use GitHub's gh CLI, fork and clone the repository with this command:

gh repo fork https://github.com/aws-samples/amazon-sqs-best-practices-cdk --clone

After forking and cloning:

Create a new directory called .github and a sub-directory called workflows.

Create a new file called main.yml in the workflows sub-directory.

Now you're ready to create your GitHub Action workflow which will deploy your CDK stack using LocalStack's cloud emulator.

Set Up the Actions & dependencies

To achieve the goal, you can use a few prebuilt Actions:

actions/checkout: Clone the repository for deploying the stacks.

setup-localstack: Set up the GitHub Actions workflow with the LocalStack container & localstack CLI.

setup-node: Set up the GitHub Actions workflow with NodeJS & npm.

setup-python: Set up the GitHub Actions workflow with Python & pip.

Add the following content to the main.yml file created earlier:

    name: Deploy on LocalStack

    on:
      push:
        branches:
          - main
      pull_request:
        branches:
          - main

This ensures that every time a pull request is raised or a new commit is pushed to the main branch, the action is triggered.

Create a new job named cdk and specify the GitHub-hosted runner executing our workflow steps, while checking out the code:

    jobs:
      cdk:
        name: Setup infrastructure using CDK
        runs-on: ubuntu-latest
        steps:
          - name: Checkout the code
            uses: actions/checkout@v4

Next, add steps that install Node.js and Python on the runner:

          - name: Setup Node.js
            uses: actions/setup-node@v3
            with:
              node-version: 18

          - name: Install Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'

Next, set up LocalStack in your runner using the setup-localstack action:

          - name: Start LocalStack
            uses: LocalStack/setup-localstack@main
            with:
              image-tag: 'latest'
              install-awslocal: 'true'
              use-pro: 'true'
              configuration: LS_LOG=trace
            env:
              LOCALSTACK_API_KEY: ${{ secrets.LOCALSTACK_API_KEY }}

This action pulls the LocalStack Pro image (localstack/localstack-pro:latest), installs the localstack CLI, and sets up awslocal to redirect AWS API requests to the LocalStack container. The LS_LOG=trace configuration enables trace-level logging.

A repository secret LOCALSTACK_API_KEY is also specified to activate your Pro license on the GitHub Actions runner. Later in the article, you will learn the steps to configure the secret in your GitHub repository.

Finally, install other dependencies, such as CDK & cdklocal, and various Python libraries specified in requirements.txt:

          - name: Install CDK
            run: |
              npm install -g aws-cdk-local aws-cdk
              cdklocal --version

          - name: Install dependencies
            run: |
              pip install -r requirements.txt

Now, you are ready to deploy the CDK stack on the GitHub Action runner by specifying the appropriate CDK commands in the workflow file.

Deploy the CDK stack on LocalStack

To deploy the CDK stack, employ the cdklocal wrapper. First, ensure that each AWS environment intended for resource deployment is bootstrapped. Execute the following cdklocal bootstrap command, adjusting the AWS account ID (000000000000) and region (us-east-1) as needed:

          - name: Bootstrap using CDK
            run: |
              cdklocal bootstrap aws://000000000000/us-east-1

Note that the account ID and region values can be customized for multi-account and multi-region setups in LocalStack.

Next, confirm correct stack synthesis by running cdklocal synth. If your application includes multiple stacks, specify which ones to synthesize. However, in this case with a single stack, the CDK CLI automatically detects it:

          - name: Synthesize using CDK
            run: |
              cdklocal synth

Following successful synthesis, proceed to deploy the CDK stack with cdklocal deploy. To avoid manual confirmation in non-interactive environments like GitHub Actions, include --require-approval never:

          - name: Deploy using CDK
            run: |
              cdklocal deploy --require-approval never

Implement integration tests against LocalStack

Now, you can implement a straightforward integration test with the following steps:

Validate CDK outputs (cdk.out and manifest.json).

Query the deployed S3 bucket and DynamoDB table.

Trigger CSV processing by uploading a sample CSV file to the S3 bucket.

Scan the DynamoDB table to confirm inventory updates.
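The first step can go a little further than checking that the files exist. The sketch below reads a CDK manifest and lists the stacks it declares. It assumes the manifest has a top-level "artifacts" mapping whose stack entries carry the type "aws:cloudformation:stack"; the helper name `stack_artifacts` is hypothetical, and the stack name SqsBlogStack in the demo data is inferred from the resource prefixes used in this post.

```python
import json
import tempfile
from pathlib import Path


def stack_artifacts(manifest_path):
    """Return the sorted names of CloudFormation stack artifacts.

    Assumes a top-level "artifacts" mapping in which each entry has a
    "type" field, with stacks marked "aws:cloudformation:stack".
    """
    manifest = json.loads(Path(manifest_path).read_text())
    return sorted(
        name
        for name, artifact in manifest.get("artifacts", {}).items()
        if artifact.get("type") == "aws:cloudformation:stack"
    )


# Demo against a throwaway manifest; in the workflow you would pass
# "cdk.out/manifest.json" instead. All values below are placeholders.
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "manifest.json"
    demo.write_text(json.dumps({
        "version": "0.0.0",
        "artifacts": {
            "Tree": {"type": "cdk:tree"},
            "SqsBlogStack": {"type": "aws:cloudformation:stack"},
        },
    }))
    stacks = stack_artifacts(demo)

print(stacks)
```

An assertion such as `assert stack_artifacts("cdk.out/manifest.json")` would then fail when synthesis produced no stacks at all, which a plain existence check cannot detect.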

For integration testing, you can use the AWS SDK for Python (boto3) and the pytest framework. Create a new directory called tests and create a file named test_infra.py. Add the necessary imports and pytest fixtures:

    import os
    import boto3
    import pytest
    import time


    @pytest.fixture
    def s3_client():
        return boto3.client(
            "s3",
            endpoint_url="http://localhost:4566",
            region_name="us-east-1",
            aws_access_key_id="test",
            aws_secret_access_key="test",
        )


    @pytest.fixture
    def dynamodb_client():
        return boto3.client(
            "dynamodb",
            endpoint_url="http://localhost:4566",
            region_name="us-east-1",
            aws_access_key_id="test",
            aws_secret_access_key="test",
        )

In this code, boto3 clients for interacting with the LocalStack instance are created. Two clients, s3_client and dynamodb_client, are generated, specifying the region and mock AWS Access Key ID and Secret Access Key.
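The two fixtures repeat the same connection settings, so one option is to build them in a single place. The sketch below is an illustration only: the helper name `localstack_client_kwargs` and the environment variable `LOCALSTACK_ENDPOINT` are hypothetical names chosen for this example, not part of LocalStack or boto3.

```python
import os


def localstack_client_kwargs(region="us-east-1"):
    """Build the keyword arguments shared by every boto3 client in the tests.

    LOCALSTACK_ENDPOINT is a hypothetical variable name used only in this
    sketch; when it is unset, the endpoint from the fixtures above applies.
    """
    return {
        "endpoint_url": os.environ.get(
            "LOCALSTACK_ENDPOINT", "http://localhost:4566"
        ),
        "region_name": region,
        "aws_access_key_id": "test",
        "aws_secret_access_key": "test",
    }


kwargs = localstack_client_kwargs()
print(kwargs["endpoint_url"])
```

Each fixture body could then shrink to `return boto3.client("s3", **localstack_client_kwargs())`, with the service name as the only difference between them.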

Now, include the following code to execute an integration test against the deployed infrastructure:

    def test_cdk(s3_client, dynamodb_client):
        # Assert CDK outputs
        assert os.path.exists("cdk.out")
        assert os.path.exists("cdk.out/manifest.json")

        # Check S3 bucket existence
        target_bucket_prefix = "sqsblogstack-inventoryupdatesbucketfe-"
        response = s3_client.list_buckets()
        target_bucket = next(
            (
                bucket["Name"]
                for bucket in response["Buckets"]
                if bucket["Name"].startswith(target_bucket_prefix)
            ),
            None,
        )
        assert target_bucket is not None

        local_file_path = "sqs_blog/sample_file.csv"
        s3_object_key = "sample_file.csv"
        s3_client.upload_file(local_file_path, target_bucket, s3_object_key)

        target_ddb_prefix = "SqsBlogStack-InventoryUpdates"
        response = dynamodb_client.list_tables()
        target_ddb = next(
            (
                table
                for table in response["TableNames"]
                if table.startswith(target_ddb_prefix)
            ),
            None,
        )
        assert target_ddb is not None

        time.sleep(10)

        # Check if there is at least one item in the DynamoDB table
        response = dynamodb_client.scan(TableName=target_ddb)
        assert response.get("Count", 0) > 0

This code uploads a sample CSV file (sqs_blog/sample_file.csv) to the local S3 bucket and checks for inserted items in the DynamoDB table. To automate running this test in a GitHub Action workflow, add the following step:

          - name: Run integration tests
            run: |
              pip3 install boto3 pytest
              pytest

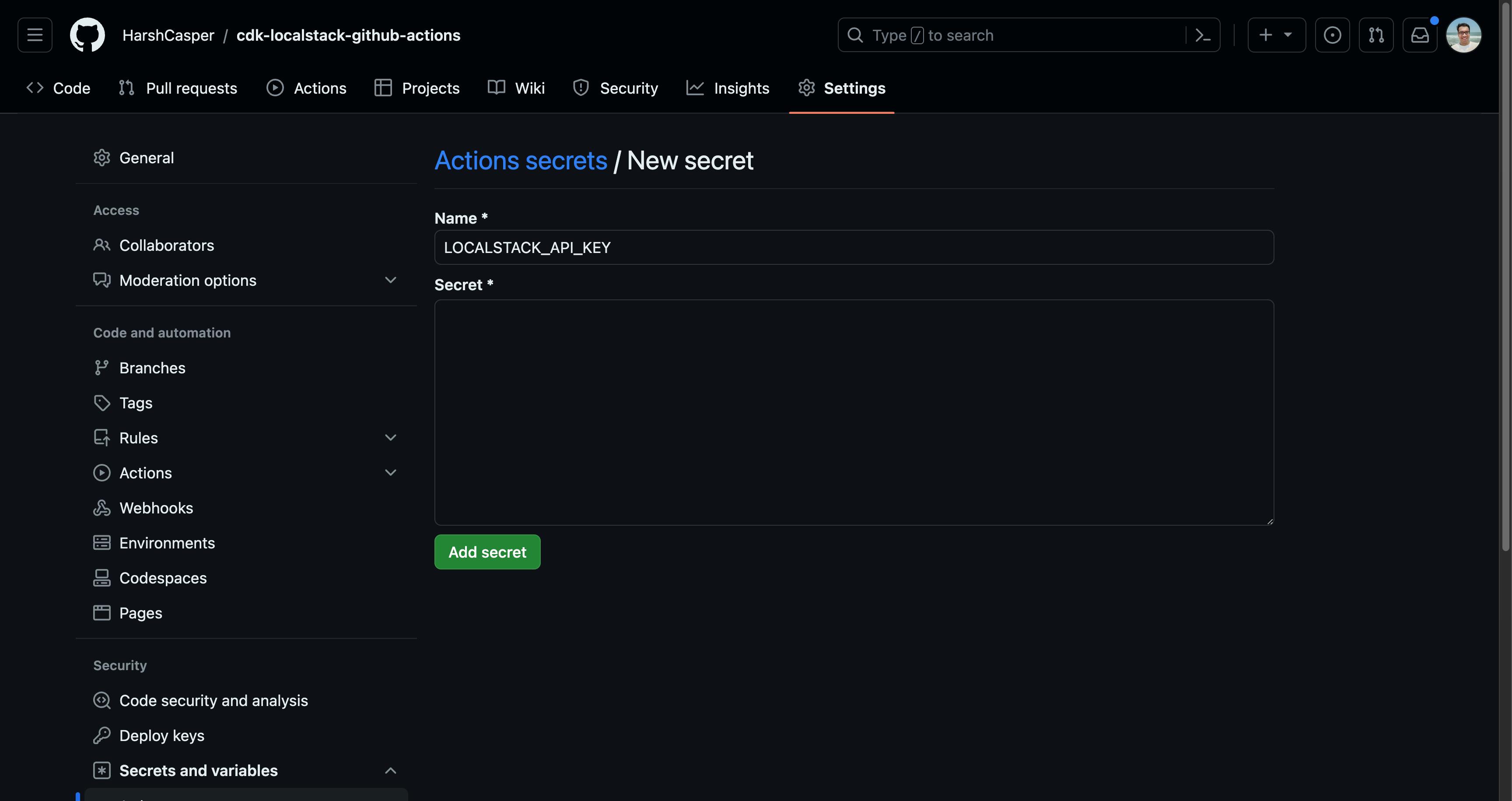
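The test pauses for a fixed 10 seconds before scanning the table, which can be too short on a slow runner and wasteful on a fast one. A polling loop is a common alternative. The sketch below is one possible shape for it: `wait_until` is a hypothetical helper, and `table_has_items` is a stand-in for the real DynamoDB scan so the example runs without LocalStack.

```python
import time


def wait_until(predicate, timeout=30.0, interval=1.0):
    """Call predicate() until it returns a truthy value or time runs out.

    Returns True if the predicate succeeded, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        if predicate():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Stand-in for "the table has items": succeeds on the third call.
calls = {"n": 0}


def table_has_items():
    calls["n"] += 1
    return calls["n"] >= 3


ok = wait_until(table_has_items, timeout=5.0, interval=0.01)
print(ok, calls["n"])
```

In test_cdk, the sleep and the final assertion could be replaced with something like `assert wait_until(lambda: dynamodb_client.scan(TableName=target_ddb).get("Count", 0) > 0)`, so the test finishes as soon as the first item arrives.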

Configure a CI key for GitHub Actions

Before you trigger your workflow, set up a continuous integration (CI) key for LocalStack. LocalStack requires a CI Key for use in CI or similar automated environments.

Follow these steps to add your LocalStack CI key to your GitHub repository:

Go to the LocalStack Web Application and access the CI Keys page.

Switch to the Generate CI Key tab, provide a name, and click Generate CI Key.

In your GitHub repository secrets, set the Name as LOCALSTACK_API_KEY and the Secret as the CI Key.

Now, you can commit and push your workflow to your forked GitHub repository.

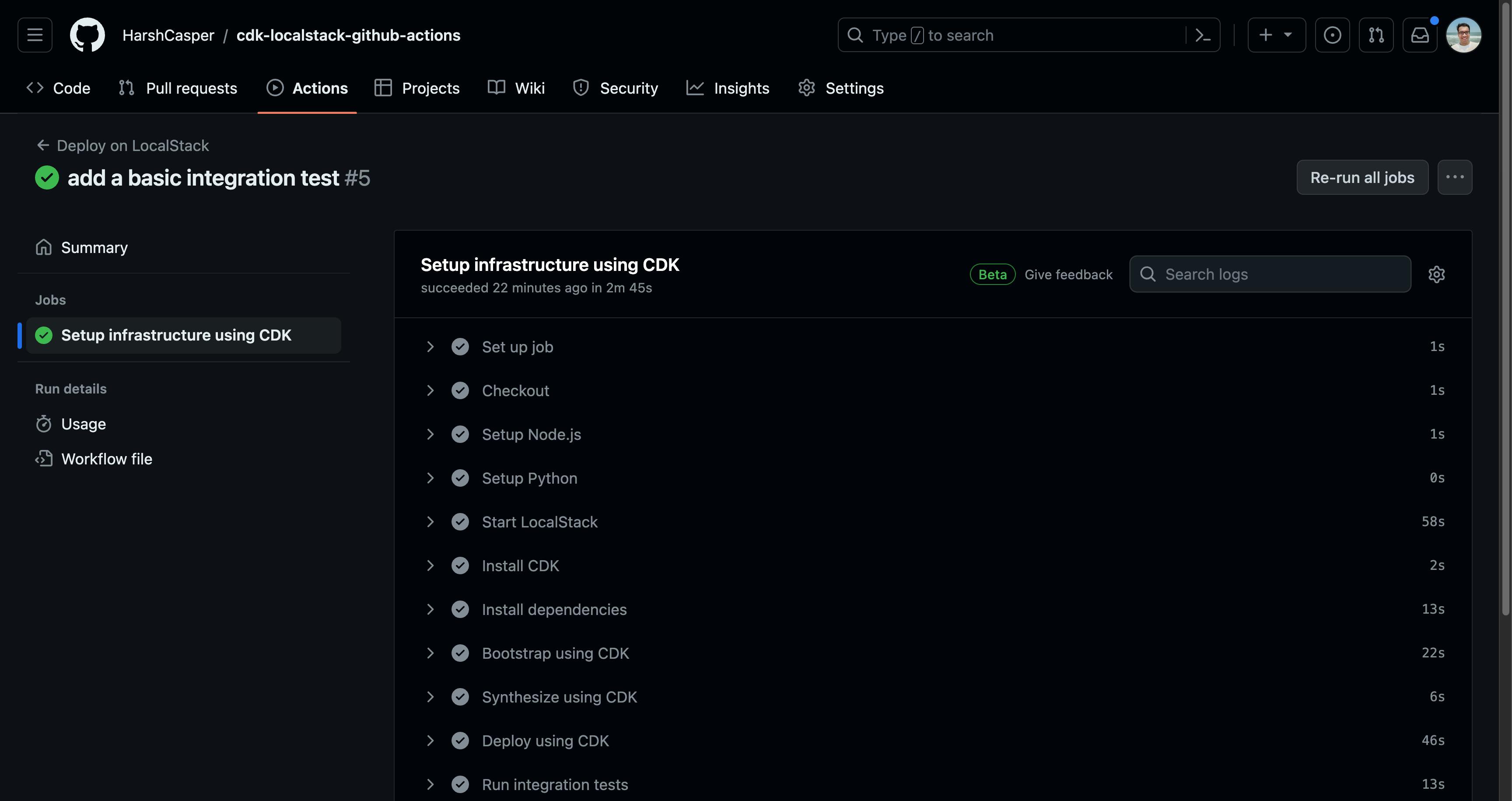

Run the GitHub Action workflow

With the GitHub Action Workflow in place, your CDK stack will be tested and deployed on LocalStack whenever changes are made to the main branch of your GitHub repository. 🎊

If your CDK deployment encounters issues and fails on LocalStack, you can troubleshoot by adding extra steps to generate a diagnostics report. After downloading, you can visualize logs and environment variables using a tool like diapretty:

          - name: Generate a Diagnostic Report
            if: failure()
            run: |
              curl -s localhost:4566/_localstack/diagnose | gzip -cf > diagnose.json.gz

          - name: Upload the Diagnostic Report
            if: failure()
            uses: actions/upload-artifact@v3
            with:
              name: diagnose.json.gz
              path: ./diagnose.json.gz
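If you prefer a quick look at the report before reaching for a viewer, the file can be opened with the Python standard library. This is a minimal sketch: `load_diagnose_report` is a hypothetical helper, and the demo data is a placeholder rather than the real layout of a LocalStack report, which is not described in this post.

```python
import gzip
import json
import os
import tempfile


def load_diagnose_report(path):
    """Decompress and parse a gzip-compressed JSON file."""
    with gzip.open(path, "rt", encoding="utf-8") as handle:
        return json.load(handle)


# Demo with placeholder content; point the helper at your downloaded
# diagnose.json.gz instead.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "diagnose.json.gz")
    with gzip.open(path, "wt", encoding="utf-8") as handle:
        json.dump({"example-section": {"key": "value"}}, handle)
    report = load_diagnose_report(path)

# Listing the top-level keys shows which sections the report contains.
print(sorted(report))
```

Artifacts downloaded from the GitHub web interface usually arrive wrapped in a zip archive, so you may need to extract diagnose.json.gz from it first.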

Conclusion

Testing your infrastructure code with LocalStack provides a developer-friendly experience, supporting a quick and agile test-driven development cycle. This happens without incurring any costs on the actual AWS cloud and without waiting around for prolonged CI runs.

In the upcoming blog posts, we'll demonstrate how to inject your infrastructure state and execute application integration tests without the need for manual deployments (using CDK or Terraform). Stay tuned for more blogs on how LocalStack is enhancing your cloud development and testing experience.

You can find the GitHub Action workflow and integration test in this GitHub repository.